Abstract

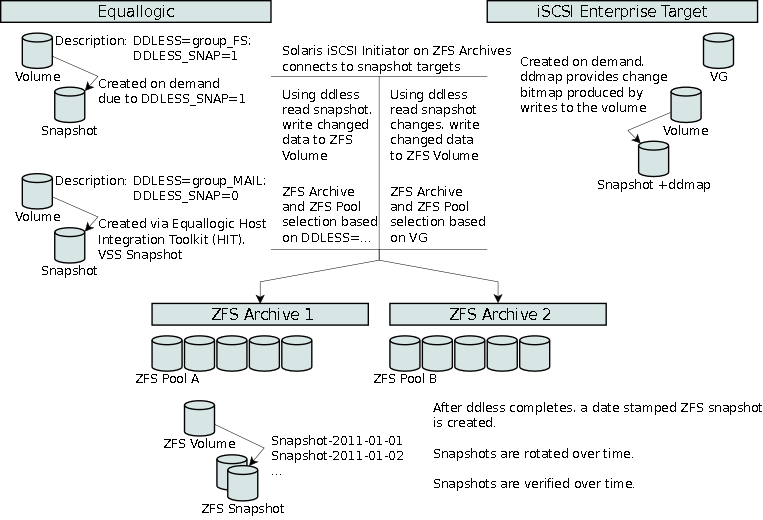

This document provides details on integrating an iSCSI Portal with the Linux iSCSI Enterprise Target modified to track data changes, a tool named ddless to write only the changed data to Solaris ZFS volumes while creating ZFS volume snapshots on a daily basis providing long-term backup and recoverability of SAN storage disks.

Preface

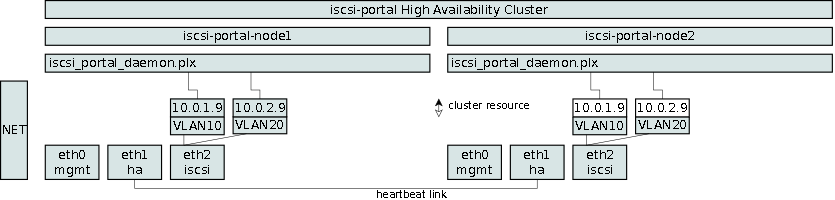

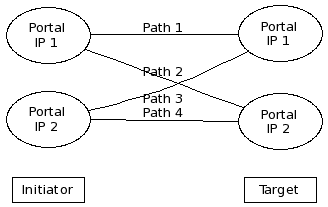

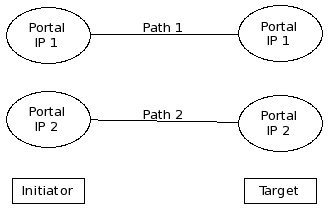

The iSCSI initiators connect with the iSCSI Portal providing connection redirection services in a round-robin fashion to available iSCSI Enterprise Target hosts featuring multiple Ethernet interfaces. The iSCSI Enterprise Target provides virtual disk services to iSCSI initiators. As iSCSI initiators write data to the underlying logical volumes using Linux LVM (Logical Volume Management), the iSCSI Enterprise Target tracks these changes in a ddmap which is a binary map indicating which 16 KB segments have been written to.

A tool named ddless can read either complete logical volumes or utilize the ddmap data reading only those 16 KB segments that have been written to and then write the actual changes to Solaris ZFS volumes. Only during the backup process are LVM snapshots utilized allowing iSCSI Enterprise Target to perform at its peak. By transmitting incremental changes we reduce the backup time.

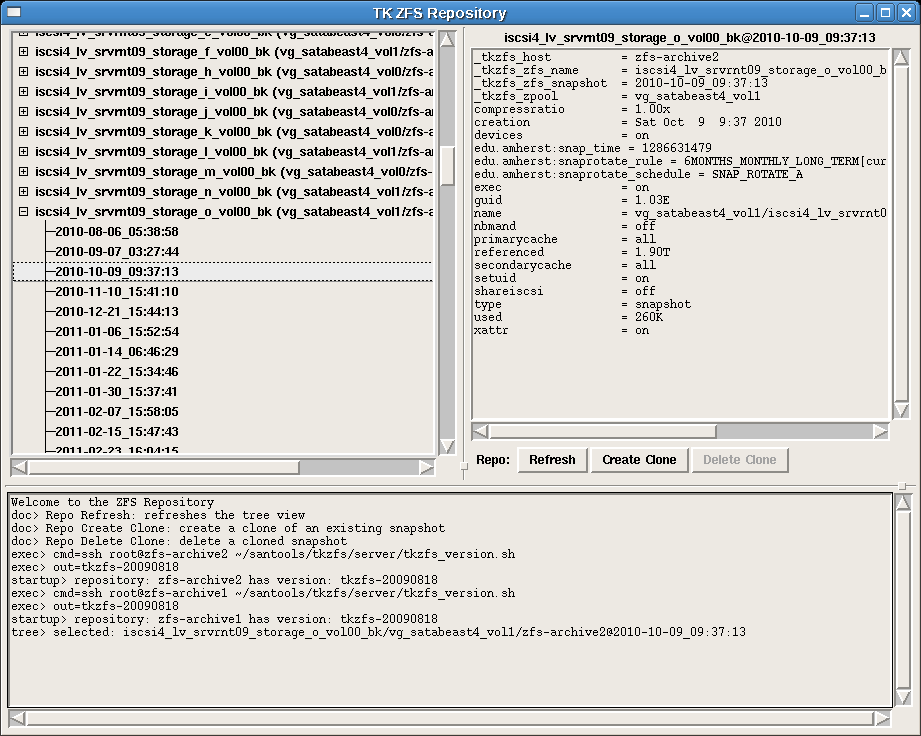

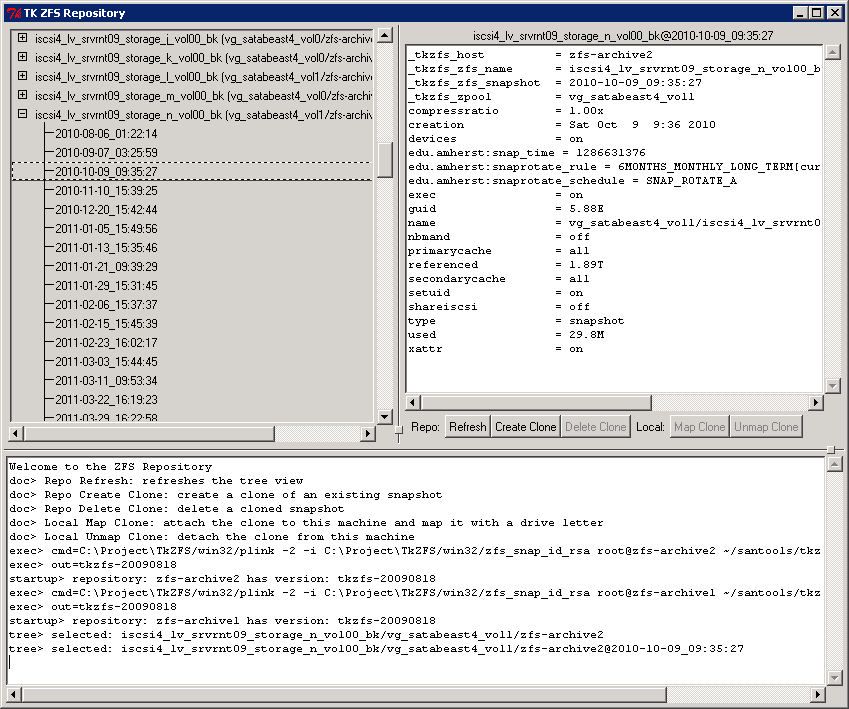

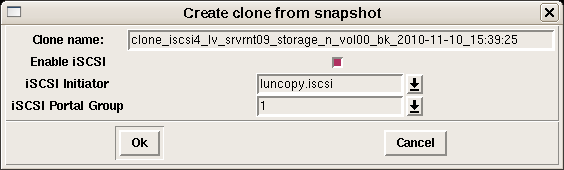

Once the backup process completes, a Solaris ZFS volume snapshot is established. Customizable ZFS volume snapshot rotation can be configured providing, for example, daily backups for several weeks and weekly backups for several months. A GUI tool called TkZFS has been written to manage the Solaris ZFS volumes and snapshots. TkZFS allows the operator to create clones of Solaris ZFS snapshots which can be exposed to remote hosts for complete or partial system restoration.

Production Information

As of April 2011, we provide 80 TB of iSCSI storage (>50% utilized), configured 92 targets each consisting of a single LUN (logical unit). On Solaris ZFS we currently maintain 77 volumes from the iSCSI Enterprise target and 42 volumes from the Equallogic storage. 9021 ZFS snapshots are in place to provide restorability of logical volumes for up to one full year.

The system has been developed over several years, it was put into production June of 2010.

How to read this document

Read it all. The more complex answer is it depends on the reader and how technically involved the reader is with SAN terminology. However, as the author has also learned, things can be learned sometimes by reading other books or by immersion - it is possible.

It is recommended to read the "Amherst College SAN History" chapter first, then followed by at least the "Architecture Overview" sections of all chapters, such as iSCSI Target, iSCSI Portal, ddless and ddmap and the ZFS Archive. Then as interest develops the reader can dive into each chapter in more detail.

Some chapters, such as the DDMAP Kernel Reference, are only needed when studying the low level implementation of ddmap and debugging ddmap.

Acknowledgments

I would like to acknowledge the many contributors of the iscsi-target-development mailing list and specifically Ming Zang, Arne Redlich and Ross Walker who have consistently helped many mailing members with complex storage problems.

I would also like to acknowledge members of the departments "Systems and Networking Group" and "Computer Science" of Amherst College for aiding us with the concepts and implementation the project described within this document.

How this document was prepared

This documentation was prepared using AsciiDoc, GraphViz, Dia and Gimp.

Amherst College SAN History

StoneFly

We have initially worked with iSCSI storage using the vendor StoneFly which was introduced by Brandon Stone at Amherst College between 2003 and 2004. Some storage was provisioned using the StoneFly equipment for some of our Windows file servers. The StoneFly device was connected to RaidWeb disk arrays which consume IDE disks and expose a RAIDed SCSI disk channel. The StoneFly device provides iSCSI targets and LUNs. We have learned over time that the RaidWeb boxes can pass along disk errors as SCSI bus timeouts making them unreliable. At that time StoneFly did not provide any SAN backup or replication facility at that time.

The author would like to acknowledge Brandon Stone’s commentary. He said to me in the server room one day that I would be involved in open-source iSCSI. Back in the days (as some know this phrase), I honestly had no idea what paths I would be traveling. This document is the result.

Equallogic

In 2005 Amherst College decided to look for a commercial vendor for SAN disk storage. Several vendors were considered and with the strong influence of smart product and vendor selection, David Irwin advised us to choose the Equallogic, which is now part of Dell and we happen to be a Dell shop. The Equallogic has grown currently to about 21 TB of storage provisioned mainly for Exchange mail, file servers, CMS and VMware virtual machine hosts.

The purchase of the Equallogic also triggered the purchase of another product called FilesX which promised to aid with the fast backup of file and Exchange servers. Alternative options for Equallogic backup is to use Equallogic replication requiring further Equallogic devices at the remote site increasing the costs substantially.

FilesX required a destination disk device to write the backup data. We invested in less expensive storage using NexSAN’s fiber-channel based AtaBeast and then later SataBeast. The SataBeasts provide generally 32 TB of storage using 42 disks.

The FilesX product was operated by David Irwin. The product was a Windows only product and installed disk input/output drivers on the hosts that it was backing up. The driver would track the changes written to disk and later deliver those changes to the FilesX server. One of the inherent problems is that a reboot caused a complete disk read and transfer to the FilesX server. We have found the performance of those reads to be between 1 and 3 MB/s. The Equallogic can provide much better performance than that. The FilesX vendor advised us to use a SAN mode bypassing the host for the full reads, however that did not help in terms of performance.

We spent some time with the FilesX vendor and determined that they configured their transfer mechanism to read using 16 KB blocks. After some investigation of disk transfers between the Equallogic and a Linux host, we determined that the amount of data a process reads at once matters. Reading using 16 KB block sizes results in poor performance. Reading using 1MB block sizes, we can see about 80-90 MB/s, for example. The vendor was advised to change their algorithm to read in 1MB block sizes and process in 16 KB increments when processing a full read. The vendor did not provide a fix.

At this time we have started to backup some of the Equallogic data using a linux host as an agent to transfer data. The iSCSI initiator on CentOS 4 was used to read the data from the Equallogic snapshot and write the data to the SataBeast on an LVM logical volume. The command then used was:

dd if=/dev/sda of=/dev/vg_satabeast1_vol0/lv_nfs_bk bs=1M

Later on it became apparent that Linux was buffering everything it was reading hence wasting considerable time and keeping our transfer speeds at 50MB/s. The SataBeast is able to write around 160 MB/s. We have determined that if you read from a raw device in Linux you can bypass the buffering and we finally have speeds of around 100MB/s. We have also found that the optimization of the Ethernet driver matters. For example, with the e1000 driver (Intel Ethernet) we had to use the latest source code and optimize the load options:

options e1000 InterruptThrottleRate=1,1 FlowControl=3,3 RxDescriptors=4096,4096 TxDescriptors=4096,4096 RxIntDelay=0,0 TxIntDelay=0,0

With FilesX reading a disk at 1-3 MB/s versus Linux reading the same disk at 100 MB/s was a problem. David Irwin spent countless hours trying to get through the full backups after a maintenance reboot of all Exchange servers for several weeks. File servers were initially assigned to the FilesX backup software, however systems like file servers provide over 6 TB of data and FilesX’s performance became a bottleneck.s

iSCSI Enterprise Target

In the year 2005, we have started to look at open-source iSCSI target software. Several projects were considered, among them were: iet (iSCSI Enterprise Target), scst (Generic SCSI target subsystem). We initially used fiber-channel with scst since that was the supported transport protocol at that time. The iSCSI transport protocol was added using some of the source code from iet later on. There was a general consensus that we did not want to provision fiber-channel to every system.

The host iscsi-target1 was born, providing 1.5 TB of storage, using the iet project. Looking back, the implementation was crude and had a lot of issues: we had no target configuration automation, we had no clustering of two nodes in case one died and no network interface redundancy and no backups other than file level backups.

The target configuration automation process was helped along with scripts that would allow an operator to specify the configuration of a target and LUN in a text file. The text file was processed updating the running iet environment and static config files. The earliest external reference to this project can be found at http://sourceforge.net/apps/mediawiki/iscsitarget/index.php?title=Easy_management.

The host iscsi-target1 functioned well while it was operating, however, things like software maintenance were painful, since the system restart took well over 2 minutes. A SAN outage of over 2 minutes is not an acceptable event.

In 2006, we decided to fix the short-comings of iscsi-target1. We designed iscsi-target3 (iscsi-target2 was a non-hot spare for iscsi-target1).

The backing store for iscsi-target3 was a fiber-channel based AtaBeast (now SataBeast) by NexSan. We used the Linux heartbeat project for clustering and let it manage the LVM volume group resource, the IP addresses for several VLANs and the iet service itself. A detailed write up about this setup is provided at https://www3.amherst.edu/~swplotner/iscsitarget-2006/.

The issue of block-level backup was also addressed and modified over the years. The initial implementation used dd (a Unix tool to convert and copy data) to transfer data blocks from a source SataBeast to a destination SataBeast located in another building connected via fiber-channel. The data transfer was performed for each actively used LVM logical volume utilizing a logical volume snapshot during the time of backup.

In terms of backup, we are not interested in a constant hot copy using DRBD (Distributed Replicated Block Device), but rather a copy that originated from snapshot back in time. At this time, a single backup instance was sufficient, however 5 years later that has changed.

Toolbox dd, ddless and ddmap

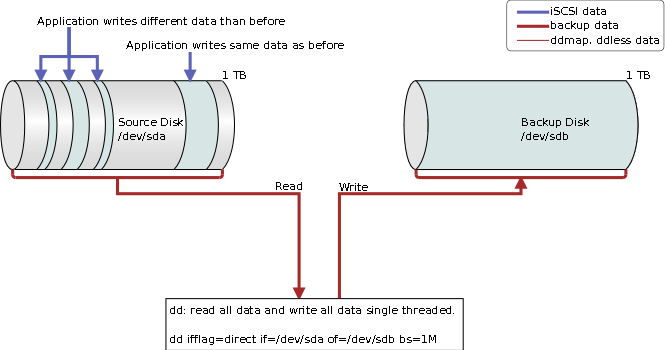

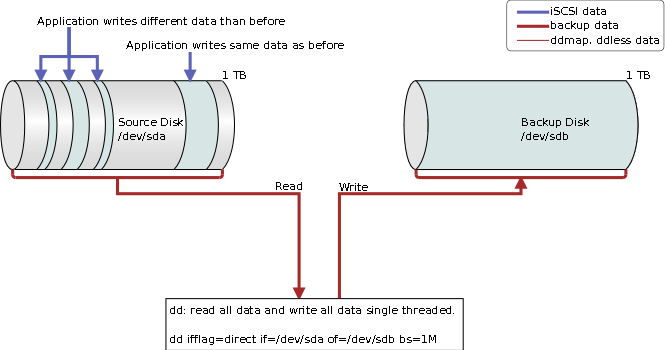

We have discussed several ideas of how to backup logical volumes consuming terabytes of data. The standard dd tool has basically two problems: it reads and it writes. Let’s rephrase this: it reads all the data and writes all the data.

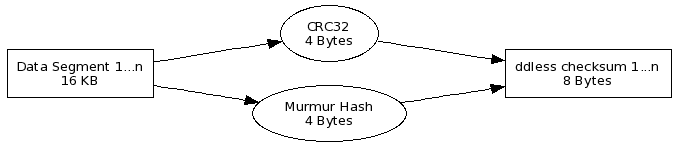

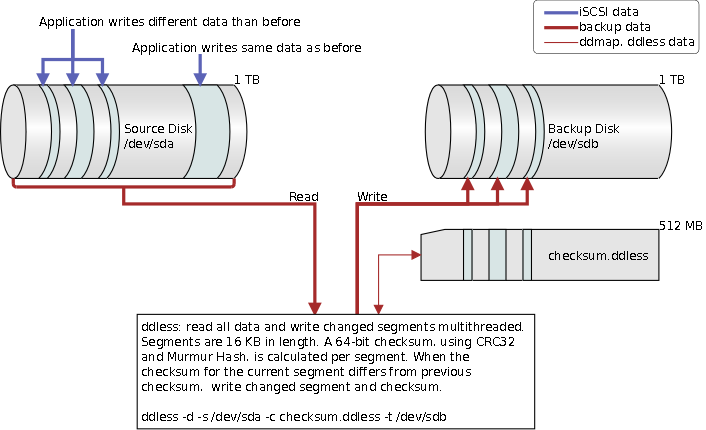

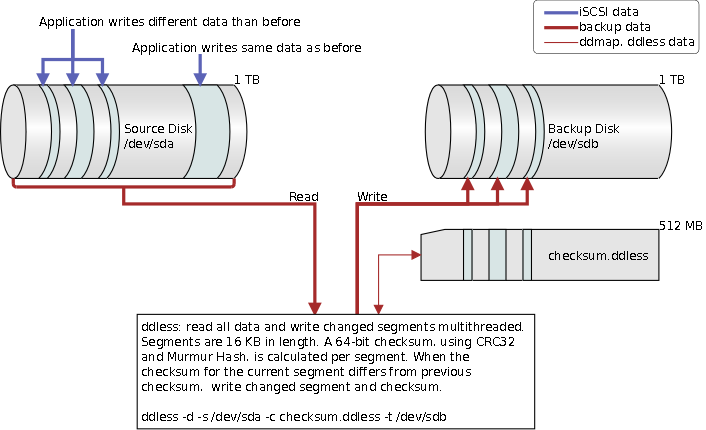

We decided to work on the write problem first, because writing the same over and over is a waste and also causes unnecessary high load average on Linux systems. We designed a tool called ddless which would only write those 16 KB segments that had not changed. Given a 16 KB segment, we compute a checksum and store it in a ddless checksum file. The segment is only written back to the disk if its checksum differs from the existing ddless checksum. If the ddless checksum does not exist, we write all segments and compute their checksums.

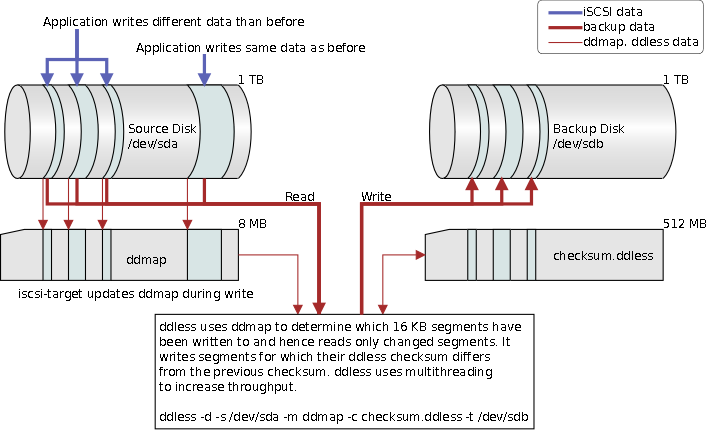

We have used ddless for several years with iscsi-target3. A few years later we decided to work on the read issue. Reading the same data over and over is also a waste. If we could only know what has changed.

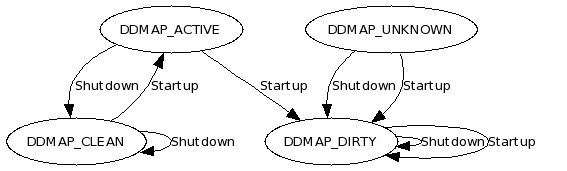

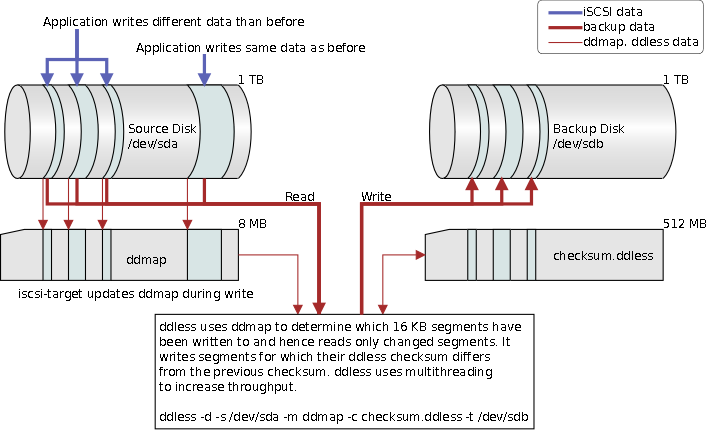

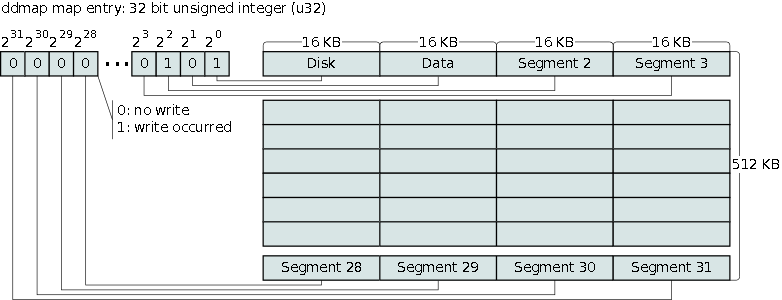

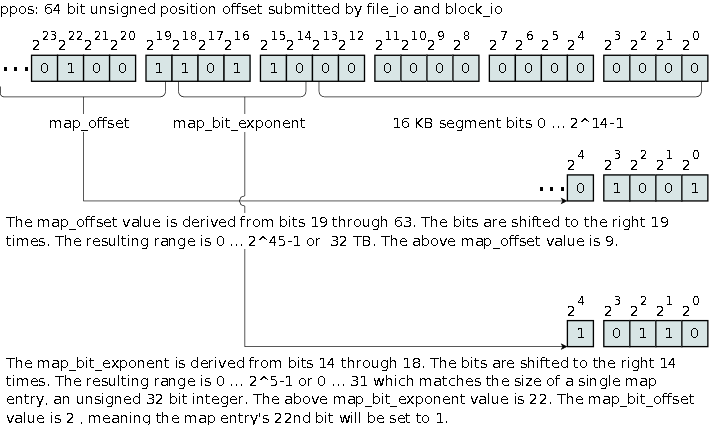

We are aware of LVM snapshots and their write performance issues, so tracking long-term data changes using LVM snapshots is not feasible. A different solution is to create a bitmap where each bit tracks a 16 KB segment. If the segment got written to we flip the bit to 1. When we want to know what has changed, we look at the bits of the bitmap with the value 1, read the related data and then clear the map. We have called these bitmaps ddmap and they indicate which data segments contain changes that must be read. A LVM snapshot is utilized to read the disk’s data in conjunction with the ddmap bitmap. The snapshot lasts for the duration of the backup only.

The command line tool ddless has been modified to support ddmap indicating which segments need to be read. The ddmap feature is implemented in the block_io and file_io layer of the iscsi-target server. The ddmap data can be managed via the /proc/net/iet/ddmap/ file system. More details on this in later sections.

The ddless/ddmap integration allows us to backup changes of 32 TB LVM system in about 2 hours instead of several days. This is of course work load dependent. If a process decides to make changes to each and every data block, then we will spend several days backing up the data.

Keep in mind that the idea of ddmap was not created until after we had tested Sun’s ZFS zfs send/receive functionality. That worked flawlessly, except that ZFS volume backed block devices have inconsistent read and write performance which made Sun’s ComStar + ZFS combination a non-viable iscsi-target to present real-time video streams, for example.

iSCSI Target (iscsi-target)

Architecture Overview (iscsi-target)

The iscsi-target name refers to a collection of services, such as the heartbeat clustering, volume group resources, the iSCSI Enterprise Target, and networking configuration.

Heartbeat Cluster

Earlier implementations of the iSCSI Enterprise Target (IET) on our systems utilized the heartbeat cluster software for fail-over purposes. The design was limited to an active/passive node setup with maximum of two nodes. The current design allows for n nodes to be active.

LVM Volume Group Resources

A LVM volume group can only be active on a single node. The physical volumes of the volume group must be visible to all nodes which can be implemented using fiber-channel host bus adapters (HBA) or a shared SCSI bus provided each HBA does not reset the bus during boot.

Each volume group resource is expected to have a logical volume named lv_iscsi_target. This logical volume is formatted using the ext3 file system and is mounted in conjunction with the volume group resource activation. It contains all IET meta data of logical volumes of the current volume group. This feature specifically allows volume groups to be moved from node to node during maintenance or failover events.

The heartbeat resource group configuration provides support for ordered resources. In this case a resource group is configured consisting of a volume group and a file system. On start-up the volume group is activated followed by mounting the file system; on shut-down the file system is dismounted before the volume group is deactivated.

iSCSI Enterprise Target (IET)

Each LVM volume group consists of logical volumes to be configured as LUNs for use by iSCSI Enterprise Target (IET). IET configuration files are by default located under /etc/iet. This location is a node specific location. Since a volume group can migrate from node to node, the target and LUN configuration in ietd.conf cannot be the same for each node. A node that does not hold a volume group resource would not be able to open the referenced logical volumes since they are not mapped by the device mapper.

The lv_iscsi_target device is mounted under /mnt/iscsi-target/vg_name#_vol#/ and consists of IET configuration components such as ietd.conf, initiators.allow and targets.allow. When the iscsi-target services are started, the IET configuration components from each active volume group are are combined into a single IET configuration stored under /etc/iet.

-

ietd.conf contains target and associated LUN configuration

-

initiators.allow controls target initiator access

-

targets.allow controls target network access

Networking

Our networking environment requires us to support iSCSI services in multiple VLANs. Earlier implementations of the IET project provisioned bonded/ether-channeled network interfaces to improve bandwidth and throughput. To support operating system with multipath requirements bonded interfaces are not best practice because the resulting path and traffic layout is possibly not balanced.

The current design supports multiple physical Ethernet interfaces, divided into sub-interfaces consisting of 802.1q tagged VLANs. A given VLAN can carry multiple subnets. Each virtual interface is assigned an IP address from the subnet it services. Having multiple IP addresses from a common subnet on separate interfaces in one host requires special network configuration options such as ARP filter and source-routing. The alternative solution is to create subnet per network interface. We believe, however, that this will create more complexity on each host involved with storage networking.

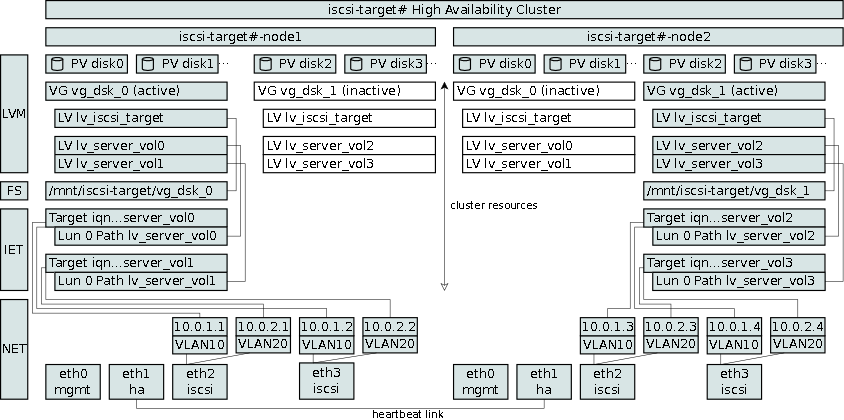

The architecture overview figure presents a cluster state where vg_disk_0 is active on node1 and inactive on node2, similarly vg_disk_1 is active on node2 and inactive on node1. Each node provisions iSCSI targets and LUNs. On node1, the logical volume lv_server_vol0 is served under the target iqn…server_vol via the interface IP addresses 10.0.1.1 and 10.0.1.2, assuming class C addresses in the same subnet. Notice, that if the volume group vg_dsk_0 becomes active on node2, the target’s IP addresses will change to 10.0.1.3 and 10.0.1.4.

|

|

It should become clear that an initiator will loose connectivity with the target when volume group resources migrate. |

An iSCSI Portal can solve the above problem. Before describing the architecture of the iscsi-portal, we need to understand the standard iSCSI Discovery and Normal sessions.

iSCSI Discovery and Normal Session

This section highlights the iSCSI Discovery and Normal session establishment. For complete details, refer to RFC 3720 [rfc3720].

The iSCSI initiator is the entity that desires to see a device. An iSCSI target is the entity that provisions the device to an initiator. The communication channel is established using TCP and the commonly used port number 3260. The endpoints of that communication channel are called network portals on both the initiator and the target.

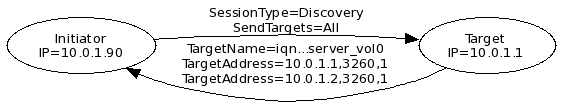

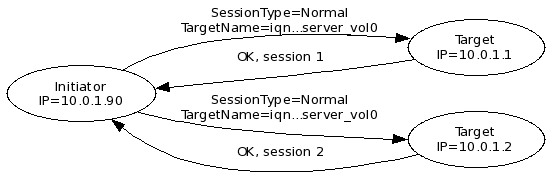

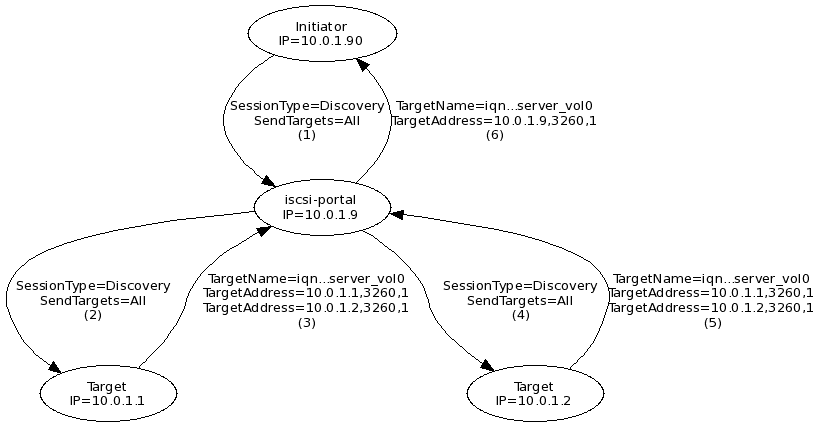

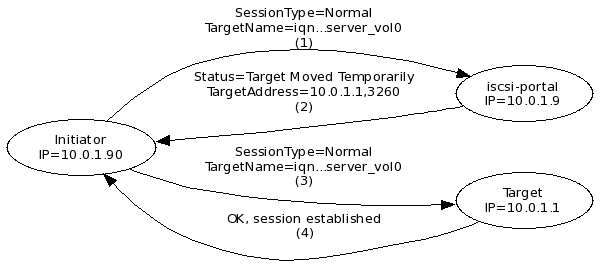

Before the initiator can access a target, the initiator discovers targets automatically or manually. During target discovery, the initiator establishes a discovery session with a network portal and queries it for known targets. The response from the target is a list of targets and their associated IP addresses. At this time the initiator closes the discovery session and initiates a normal session with the discovered target with the intend to obtain LUNs which can be disk devices from the discovered target.

The following figure demonstrates the Discovery session between an initiator with the IP 10.0.1.90 and the target network portal on VLAN10 with the IP 10.0.1.1.

Because the target is configured to use two network portals, 10.0.1.1 and 10.0.1.2, the initiator establishes multiple connections resulting in multiple identical disk devices of lv_server_vol0 at the initiator. The initiator requires multipath software and configuration.

The initiator stores the original TargetAddress for each connection. In this context the TargetAddress is also called a portal address. The initiator uses the portal address, for example, to re-establish a session after the network cable was cut and fixed again.

|

|

Initiators that interact with the standard iSCSI Enterprise Target (IET) follow the above described discovery and normal sessions sequences. Use the iscsi-portal to control target portal address provisioning. |

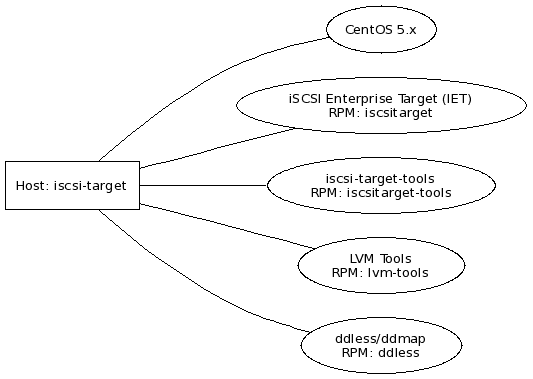

Software Packages

The iscsi-target software packages are depicted in the following diagram:

The iscsi-target host is identified with a box shape while software components are identified with an ellipse shape. Each software component consists of a software component name, which is commonly used in this documentation, followed by a RPM (RedHat Package Management) package name.

The following list presents package names providing a short description of the components:

- iscsitarget

-

The iSCSI Enterprise Target software. Configuration files are found under /etc/iet and are managed by the iscsitarget-tools package.

- /etc/iet/

-

- ietd.conf

-

Generated IET daemon configuration. The content of this file depends on the active volume groups of the current node.

- initiators.allow

-

Defines on a per target basis which initiators are allowed to connect. The rules we employ here are based on the initiator name and not the initiator IP, since it is common for a single initiator to have multiple IP addresses.

- target.allow

-

Defines which interfaces a target is exposed to.

- ietadm

-

command line interface to facilitate IET daemon management

- /proc/net/iet/

-

- session

-

contains listing of targets and associated sessions

- volume

-

contains listing of targets and associated volumes (LUNs)

- reservation

-

contains listing of targets and associated reservations

- vpd

-

contains targets and associated Vital Product Data (VPD) information

- ddmap

-

contains ddmap data of logical volumes provisioned by targets

- iscsitarget-tools

-

support tools for target cluster configuration located under /etc/iscsi-target

- ietd_cluster.sh

-

iscsi-target cluster service control script. Allows you to start and stop the iscsi-target services across the entire cluster.

- ietd_config.sh

-

configuration of iscsitarget-tools

- ietd_ddmap.sh

-

manages the state changes of ddmap data during startup and shutdown. You can query the status of the ddmap state of each logical volume.

- ietd_iptables.sh

-

manages the iptable rules during startup and shutdown of the iscsi-target services.

- ietd_routing.sh

-

manages the source routing for multiple interfaces that share IP addresses from a common subnet.

- ietd_service.sh

-

callback script with hooks for pre- and post- iscsi-target service startup and shutdown.

- ietd_targets.sh

-

manages the target and LUN configuration defined in targets.conf. Targets can be flagged online or offline, targets can be added, LUNs can be resized online.

- ietd_vg.sh

-

manages the mount point directories for volume group meta data like IET configuration and ddmap state during system startup.

- iet-tools.plx

-

command line tool to support target operations for ietd_targets.sh.

- iostat_top.sh

-

top like tool to watch disk IO of logical volumes.

- lvm_cluster.sh

-

a logical volume manager cluster tool that selects the proper node when executing specifically recognized commands like lvcreate, lvextend, lvremove, for example. Each command references a specific volume group, the tool chooses the node with the active volume group.

- session_top.sh

-

top like tool to watch and track the number of sessions per target.

- targets.conf

-

configuration file for all targets and LUNs and other meta data.

- targets_update.sh

-

triggers the ietd_targets.sh management for all configured targets within a cluster.

- zfs_tools.sh

-

zfs snapshot management interface.

- lvm-tools

-

support tools for LVM management

- /etc/lvm/lvm_tools.conf

-

configuration of the LVM tool chain.

- lvm_copy

-

copy block device contents from a source to target, the source and target can be file, logical volume or other block device.

- lvm_devsize

-

tool to determine block device size

- lvm_iostat

-

performance gathering tool for block devices

- lvm_snap_copy

-

utilizes lvm_snap_create, lvm_copy, and lvm_snap_remove.

- lvm_snap_create

-

creates a snapshot of a logical volume allocating a percentage of the origin volume

- lvm_snap_remove

-

removes a snapshot

- ddless

-

- ddless

-

copies block device data from a source to a target. Employs ddless checksums to reduce writes of identical data and uses ddmap data to reduce reads from the source device.

- ddmap

-

reads and combines multiple ddmap sources into a single ddmap file.

Our current iscsi-target nodes utilize CentOS 5 64-bit as the operating system. Ensure that the operating system updates have been installed.

Use the following commands to install the above packages listed above.

[root@iscsi-target-node1 /]# yum install iscsitarget

[root@iscsi-target-node1 /]# yum install iscsitarget-tools

[root@iscsi-target-node1 /]# yum install lvm-tools

[root@iscsi-target-node1 /]# yum install ddless

General System Preparation

The boot process of a system normally starts system services such as iscsi-target, however, since the services are clustered, we need to disable the iscsi-target services. Heartbeat manages the startup and shutdown of the iscsi-target services.

The heartbeat service should also be disabled because some of the configured resources require specific mount points to be created, we have automated that part during startup.

The following commands should be executed to configure the required system boot state:

[root@iscsi-target-node1 /]# chkconfig iscsi-target off

[root@iscsi-target-node1 /]# chkconfig heartbeat off

#

# disable volume groups

#

vgchange -an

#

# create iscsi-target volume group data mount points (iet, ddmap

# configuration files). Each volume group has logical volumes and

# for each of those there is configuration information for iet

# ddmap. These mount points MUST exist BEFORE the cluster starts!

#

/etc/iscsi-target/ietd_vg.sh

#

# then start heartbeat (this guarantees that we don't have the vg open

# on two nodes during heartbeat's startup), also make sure that since

# heartbeat is in the off state (chkconfig), it MUST be added otherwise

# it won't be shutdown during reboot(!): chkconfig --add heartbeat

#

service heartbeat start

The /etc/hosts file should be populated with all cluster node names, in case DNS experiences a failure condition.

127.0.0.1 localhost.localdomain localhost 192.168.0.1 iscsi-target-node1.amherst.edu iscsi-target-node1 192.168.0.2 iscsi-target-node2.amherst.edu iscsi-target-node2

The /etc/sysconfig/iptables contains the iptables configuration and it should by default block all incoming traffic except for the following services:

-

ssh, tcp port 22

-

iscsi, tcp port 3260

-

cluster, udp 694

The iscsitarget-tools has scripts that monitor the changes in the volume and session files under /proc/net/iet. A cron job needs to be setup to actively monitor the state changes.

# # ietd state diff # */5 * * * * root /etc/iscsi-target/iet-tools.plx -c state_diff > /dev/null 2>&1

Since an operator is notified via email as state changes occur, you should also configure the operator’s email address in /etc/iscsi-target/iet-tools.conf.

IO Scheduler

It is recommended practice to change the underlying disk scheduler from cfq to deadline.

Make sure you are using the deadline scheduler on the underlying block devices because the cfq scheduler doesn’t work correctly with IO threads spawned from the kernel.

— Ross Walker

The disk queue scheduler can be configured on a per block device using the following command:

echo deadline > /sys/block/sd?/queue/scheduler

It can also be globally configured as a kernel boot parameter configurable in /etc/grub.conf:

title CentOS (2.6.18-194.26.1.el5)

root (hd0,0)

kernel /vmlinuz-2.6.18-194.26.1.el5 ro root=/dev/vg_sata0/lv_os_root elevator=deadline

initrd /initrd-2.6.18-194.26.1.el5.img

Subsequent kernel updates retain the setting in grub.conf.

Logical Volume Management (LVM)

LVM is configured by default to look at all disks for LVM data structures. This can be problem, if a LUN provisioned to another host also maintains LVM data structures. After some period of time, LVM on the iscsi-target detects these nested data structures and allocates device mapper entries for logical volumes that are in use by another host.

The LVM configuration at the iscsi-target should filter devices and only look at devices that are part of the managed volume groups. In our implementation we are looking at devices that originate from the multipath daemon and any other devices except the root file system device are ignored.

The /etc/lvm/lvm.conf contains a filter setting that controls which devices LVM considers. Keep in mind that you can only have a single filter setting. You must create a single regular expression that works for the entire system. In our case want to accept the local disk partition 2 which contains LVM, accept devices from the multipath daemon and reject everything else:

filter = [ "a|/dev/sda2|", "a|/dev/mapper/mpath.*|", "r|.*|" ]

Multipathing devices for use by LVM

It is highly recommended to implement multiple data paths to the disks that make up the volume groups. Configuration details of multipath can be obtained via the man page multipath.conf and also via http://sources.redhat.com/lvm2/wiki/MultipathUsageGuide.

A multipath default policy needs to be established in the file /etc/multipath.conf:

defaults {

polling_interval 5

selector "round-robin 0"

path_grouping_policy multibus

path_checker tur

# lower this until reads are smooth on the graphs (default 1000)

rr_min_io 16

rr_weight uniform

failback 10

no_path_retry fail

user_friendly_names yes

}

The polling_interval is kept at the suggested default value of 5 seconds. The selector value "round-robin 0" is the only currently implemented path selector. The path_grouping_policy is multibus, indicating that all paths are in one priority group. The path checker uses the SCSI test unit ready (tur) command to verify the availability of a device. Path switches occur after 16 IO operations. Note, that this has to be tuned according to the backend devices. Since each path has the same priority we choose the rr_weight value to be uniform. Once a failed path becomes available again, defer its acceptance by 10 seconds. If a path has failed, the no_path_retry value of fail, indicates to not queue any data on that path. The user_friendly_names provide more manageable device entries.

The multipath.conf file contains blacklist and device sections which need to be adjusted according to your environment. In our case, we are utilizing NexSAN SataBeasts and the devices appear properly.

The multipaths section allows you to assign user friendly names to the WWIDs of LUNs coming from the SataBeasts. A sample section:

multipaths {

#

# mpath_vg_dsk_00

#

multipath {

wwid 36000402003fc407163ac25d900000000

alias mpath_vg_dsk_00

}

# more...

}

The WWID is obtained by interrogating each block device using the following command:

[root@iscsi-target-node1 /]# /sbin/scsi_id -g -u -s /block/sdb

36000402003fc407163ac25d900000000

The device mapper assigns in conjunction with the multipath daemon the following persistent device path /dev/mapper/mpath_vg_dsk_00 which must be used when creating the physical volume and subsequent volume group.

[root@iscsi-target-node1 /]# pvcreate /dev/mapper/mpath_vg_dsk_00

[root@iscsi-target-node1 /]# vgcreate vg_dsk_00 /dev/mapper/mpath_vg_dsk_00

Enable the multipath service and observe the multipath status:

[root@iscsi-target-node1 /]# chkconfig multipathd on

[root@iscsi-target-node1 /]# service multipathd start

[root@iscsi-target-node1 /]# multipath -l

mpath_vg_dsk_00 (36000402003fc407163ac25d900000000) dm-3 NEXSAN,SATABeast

[size=1.4T][features=0][hwhandler=0][rw]

\_ round-robin 0 [prio=0][active]

\_ 4:0:4:0 sda 8:16 [active][undef]

\_ 3:0:4:0 sdb 8:32 [active][undef]

A device can be removed from the linux kernel via the following command.

[root@iscsi-target-node1 /]# echo 1 > /sys/block/sdX/device/delete

This is useful at times when removing paths from a multipath device. More details can be found at http://www.softpanorama.org/Commercial_linuxes/Devices/multipath.shtml.

Networking Configuration

Several network interfaces require specific configuration features:

-

management

-

heartbeat

-

iSCSI

The management and heartbeat interfaces should be in different VLANs and subnets and can be configured with static IP addressing.

The interfaces that expose iSCSI traffic, however, require more attention. On the switch side of the iSCSI interface it is generally recommended to support large MTUs and ethernet flow control send and receive support. The following is an example of a CISCO configured switchport for a single VLAN carrying iSCSI traffic:

interface GigabitEthernet9/16 description iscsi-target-node1-port0 switchport switchport access vlan 10 mtu 9216 flowcontrol receive on flowcontrol send on spanning-tree portfast

If the iSCSI target provides services to multiple VLANs carrying iSCSI traffic use the following configuration which provides a trunk interface pruned to service specific VLANs:

interface TenGigabitEthernet6/1 description iscsi-target-node1-port0.trunk switchport switchport trunk encapsulation dot1q switchport trunk allowed vlan 10,20 switchport mode trunk switchport nonegotiate mtu 9216 flowcontrol receive on flowcontrol send on spanning-tree portfast trunk

ARP Filter

Supporting multiple IP addresses from a common subnet on different network adapters on a single host has by default ARP and routing issues in Linux because the kernel believes that IP addresses are owned by the host and not the interface.

|

|

The following section presents a solution to the ARP and routing issue, however, there might be other ways to solve this. |

The following is an excerpt from the kernel documenation/ip-sysctl.txt file in regards to arp_filter.

arp_filter - BOOLEAN

1 Allows you to have multiple network interfaces on the same subnet, and have the ARPs for each interface be answered based on whether or not the kernel would route a packet from the ARP’d IP out that interface (therefore you must use source based routing for this to work). In other words it allows control of which cards (usually 1) will respond to an arp request.

0 - (default) The kernel can respond to arp requests with addresses from other interfaces. This may seem wrong but it usually makes sense, because it increases the chance of successful communication. IP addresses are owned by the complete host on Linux, not by particular interfaces. Only for more complex setups like load- balancing, does this behaviour cause problems.

arp_filter for the interface will be enabled if at least one of conf/{all,interface}/arp_filter is set to TRUE, it will be disabled otherwise

— LWN

ARP filtering can be configured using /etc/sysctl.conf to ensure the change is persistent after a reboot.

net.ipv4.conf.all.arp_filter = 1

Routing based on Source IP Address

Standard routing rules are evaluated using a single routing table. The routing table selection is made using the destination IP address.

To implement routing based on source IP address, we need to establish new routing tables and routing rules. Routing rules can be added to control routing, for example, by source IP address. Refer to the man ip for more information. Routing rules can invoke the evaluation of a routing table, hence we create a distinct routing table per managed iSCSI interface.

|

|

Linux supports more than one routing table. The standard route command manipulates the main routing table. |

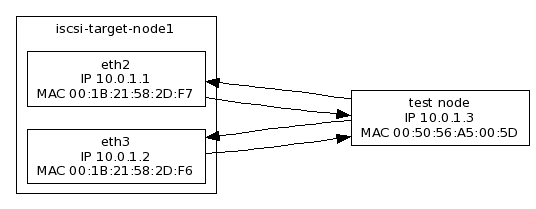

To aid the iscsi-target network portal configuration and source routing requirement stated above, we present the network interface configuration:

# Intel Corporation 82598EB 10-Gigabit AF Dual Port Network Connection DEVICE=eth2 BOOTPROTO=none HWADDR=00:1B:21:58:2D:F7 ONBOOT=yes MTU=9000

VLAN=yes DEVICE=eth2.10 BOOTPROTO=static ONBOOT=yes IPADDR=10.0.1.1 NETMASK=255.255.255.0 IET_PORTAL_GROUP=pg_vlan10_fabricIET_ROUTE_TABLE=rt_vlan10_port0

# Intel Corporation 82598EB 10-Gigabit AF Dual Port Network Connection DEVICE=eth3 BOOTPROTO=none HWADDR=00:1B:21:58:2D:F6 ONBOOT=yes MTU=9000

VLAN=yes DEVICE=eth3.10 BOOTPROTO=static ONBOOT=yes IPADDR=10.0.1.2 NETMASK=255.255.255.0 IET_PORTAL_GROUP=pg_vlan10_fabricIET_ROUTE_TABLE=rt_vlan10_port1

| The IET_PORTAL_GROUP must be consistent for interfaces servicing a specific fabric or iSCSI subnet. This tag is utilized by ietd_targets.sh script and identifies network portal membership of a configured target. | |

|

The IET_ROUTE_TABLE entry must unique for each fabric or iSCSI subnet port because specific source routing configuration is required on a per IP/port assignment basis. This tag is utilized by the ietd_routing.sh script and enables IP source routing.

The IET_ROUTE_TABLE value is a symbolic name that requires a numeric translation. The numbers refer to routing table rules and are configured in /etc/iproute2/rt_tables. Refer to man ip for more detailed information. The assignment of the numeric routing table ID is arbitrary, we chose to start with the number 10. /etc/iproute2/rt_tables

# # reserved values # 255 local 254 main 253 default 0 unspec # # amherst: routing table numbers, managed by /etc/iscsi-target/ietd_routing.sh # # iscsi 10 rt_vlan10_port0 11 rt_vlan10_port1 12 rt_vlan11_port0 13 rt_vlan11_port1 |

Both of the above configuration items IET_PORTAL_GROUP and IET_ROUTE_TABLE are enhancements added to the network configuration. It does not impact the standard linux network configuration, however, the iscsi-target-tools utilize these configuration values.

The source routing is configured by the ietd_routing.sh script which enumerates all ifcfg-eth* scripts looking for IET_ROUTE_TABLE names, IPADDR, and NETMASK. The values of these variables are used to produce the new routing tables and routing rules.

The cluster services normally invoke the startup of the iscsi-target services and hence the ietd_routing.sh script. It is recommended during initial configuration to manually start the ietd_routing.sh script via:

[root@iscsi-target-node1 /] cd /etc/iscsi-target

[root@iscsi-target-node1 /]./ietd_routing.sh start

[root@iscsi-target-node1 /]./ietd_routing.sh status

ip route show table rt_vlan10_port0  10.0.1.0/24 dev eth2 scope link src 10.0.1.1

ip route show table rt_vlan10_port1

10.0.1.0/24 dev eth3 scope link src 10.0.1.2

ip rule list

10.0.1.0/24 dev eth2 scope link src 10.0.1.1

ip route show table rt_vlan10_port1

10.0.1.0/24 dev eth3 scope link src 10.0.1.2

ip rule list  0: from all lookup 255

10: from 10.0.1.1 lookup rt_vlan10_port0

10: from 10.0.1.2 lookup rt_vlan10_port1

32766: from all lookup main

32767: from all lookup default

0: from all lookup 255

10: from 10.0.1.1 lookup rt_vlan10_port0

10: from 10.0.1.2 lookup rt_vlan10_port1

32766: from all lookup main

32767: from all lookup default

| Note the command output was initiated by ip route and the output has been abbreviated. | |

| Note the command output was initiated by ip rule and the output has been abbreviated. |

Network Configuration Validation

Once the ARP filter and source routing is working, it is time to validate the network communication channels. Prepare a host with a single network interface and IP address 10.0.1.3. It should be able to communicate with the previously configured interfaces eth2 and eth3 on VLAN 10. Note for each interface the MAC address and IP address.

The goal is to verify the ARP request/response and the subsequent IP conversation. Before testing begins, it is assumed that ARP table of the test node has been cleared:

-

The test node initiates a conversation using telnet and port 3260 and the destination IP address 10.0.1.1. The packet trace on the receiving interface eth2 should see the ARP request and response and the subsequent TCP traffic.

-

The test node repeats the above procedure with the destination IP address 10.0.1.2. The packet trace on the receiving interface eth3 should see the ARP request and response and the subsequent TCP traffic.

During the execution of the above two steps, only one of the destination interfaces should reveal packet activity.

[root@iscsi-test ~]# telnet 10.0.1.1 3260

Trying 10.0.1.1...

Connected to iscsi-target-port0 (10.0.1.1).

Escape character is '^]'.

[root@iscsi-target-node1 ~]# tcpdump -n -e -i eth1 host 10.0.1.3

23:12:59.676609 00:50:56:a5:00:5d > Broadcast, ethertype ARP (0x0806), length 60: arp who-has 10.0.1.1 tell 10.0.1.3

23:12:59.676703 00:1B:21:58:2D:F7 > 00:50:56:a5:00:5d, ethertype ARP (0x0806), length 42: arp reply 10.0.1.1 is-at 00:1B:21:58:2D:F7

23:12:59.676800 00:50:56:a5:00:5d > 00:1B:21:58:2D:F7, ethertype IPv4 (0x0800), length 74: 10.0.1.3.33147 > 10.0.1.1.iscsi-target

23:12:59.676861 00:1B:21:58:2D:F7 > 00:50:56:a5:00:5d, ethertype IPv4 (0x0800), length 74: 10.0.1.1.iscsi-target > 10.0.1.3.33147

23:12:59.677190 00:50:56:a5:00:5d > 00:1B:21:58:2D:F7, ethertype IPv4 (0x0800), length 66: 10.0.1.3.33147 > 10.0.1.1.iscsi-target

[root@iscsi-target-node1 ~]# tcpdump -n -e -i eth2 host 10.0.1.3

23:12:59.676427 00:50:56:a5:00:5d > Broadcast, ethertype ARP (0x0806), length 60: arp who-has 10.0.1.1 tell 10.0.1.3

[root@iscsi-test ~]# telnet 10.0.1.2 3260

Trying 10.0.1.2...

Connected to iscsi-target0-port1 (10.0.1.2).

Escape character is '^]'.

[root@iscsi-target-node1 ~]# tcpdump -n -e -i eth1 host 10.0.1.3

23:14:20.566939 00:50:56:a5:00:5d > Broadcast, ethertype ARP (0x0806), length 60: arp who-has 10.0.1.2 tell 10.0.1.3

[root@iscsi-target-node1 ~]# tcpdump -n -e -i eth2 host 10.0.1.3

23:14:20.566601 00:50:56:a5:00:5d > Broadcast, ethertype ARP (0x0806), length 60: arp who-has 10.0.1.2 tell 10.0.1.3

23:14:20.566784 00:1B:21:58:2D:F6 > 00:50:56:a5:00:5d, ethertype ARP (0x0806), length 42: arp reply 10.0.1.2 is-at 00:1B:21:58:2D:F6

23:14:20.566808 00:50:56:a5:00:5d > 00:1B:21:58:2D:F6, ethertype IPv4 (0x0800), length 74: 10.0.1.3.32884 > 10.0.1.2.iscsi-target

23:14:20.566858 00:1B:21:58:2D:F6 > 00:50:56:a5:00:5d, ethertype IPv4 (0x0800), length 74: 10.0.1.2.iscsi-target > 10.0.1.3.32884

23:14:20.566888 00:50:56:a5:00:5d > 00:1B:21:58:2D:F6, ethertype IPv4 (0x0800), length 66: 10.0.1.3.32884 > 10.0.1.2.iscsi-target

|

|

The above tcpdump trace have been slightly reduced to enhance readability. |

|

|

Make sure that the correct interface answers during the tests. A case to avoid is to see incoming traffic on eth3 and then outgoing traffic on eth2. Eventually, the switch processing the traffic from eth2 will broadcast the traffic to every switchport on your network! This problem is known as unicast flooding. A good reference is located at http://www.cisco.com/en/US/products/hw/switches/ps700/products_tech_note09186a00801d0808.shtml. If source routing and ARP filtering is not configured, for example, the cause of unicast flooding is due to asymmetric routing. We have experienced this problem first hand and would like to advise the reader to verify the network traces in detail. |

Network Performance Validation

To ensure reliable network throughput, interface performance must be validated. There are many tools and methodologies to test network interface performance. We are using 10 Gb/s Intel server cards with the ixbe driver.

The well-known ttcp tool works well for 1 Gb/s interfaces, however, for 10 Gb/s interfaces iperf has the required features. A single TCP connection will not fill the data pipe utilizing ttcp. With iperf you can add multiple threads to the testing process and achieve close to 9.8 Gb/s.

The server side runs:

[root@iscsi-target-node1 /]# ./iperf -s

The client side executes:

[root@iscsi-target-node1 /]# ./iperf -c 192.168.0.1 -fm -P4

[ 5] local 192.168.0.1 port 5001 connected with 192.168.0.2 port 53407

[ 4] local 192.168.0.1 port 5001 connected with 192.168.0.2 port 53408

[ 6] local 192.168.0.1 port 5001 connected with 192.168.0.2 port 53409

[ 7] local 192.168.0.1 port 5001 connected with 192.168.0.2 port 53410

[ 5] 0.0-10.0 sec 2.87 GBytes 2.46 Gbits/sec

[ 4] 0.0-10.0 sec 2.88 GBytes 2.47 Gbits/sec

[ 6] 0.0-10.0 sec 2.88 GBytes 2.47 Gbits/sec

[ 7] 0.0-10.0 sec 2.86 GBytes 2.45 Gbits/sec

[SUM] 0.0-10.0 sec 11.5 GBytes 9.85 Gbits/sec

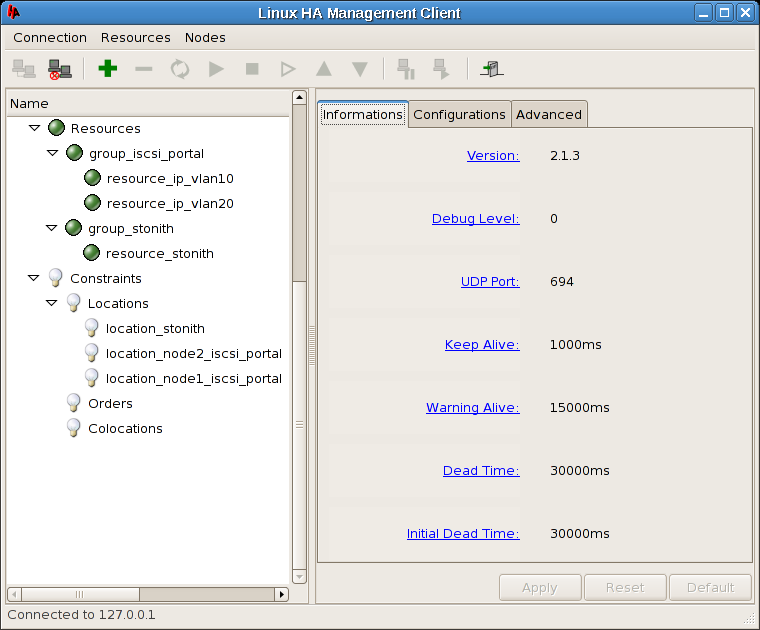

Heartbeat Cluster

The CentOS 5 distribution currently provides heartbeat-2.1.3 [heartbeat]. Use the following commands to install the required packages for heartbeat.

[root@iscsi-target-node1 /]# yum install heartbeat

[root@iscsi-target-node1 /]# yum install heartbeat-gui

|

|

We have found that the heartbeat RPM from CentOS has a %pre script execution problem during the RPM installation. This can be resolved by re-running the yum install heartbeat command a second time. |

Before starting the heartbeat services, several configuration files must be created.

auth 2 2 sha1 somesecretpreferablylong

The following file /etc/ha.d/ha.cf.template is not part of the distribution of hearbeat; however, it is a copy of ha.cf. One of the goals with the heartbeat configuration files is to keep them in sync across nodes. This is in contrast with heartbeat’s cluster resource management which is automatically synchronized across cluster nodes. The template is processed by the ha.cf.make script and it replaces @HA_UCAST1@ variable with node specific unicast interface and IP address specifications.

logfacility local0 @HA_UCAST1@ node iscsi-target-node1.amherst.edu node iscsi-target-node2.amherst.edu ping 148.85.2.1 respawn root /usr/lib64/heartbeat/pingd -m 100 -d 2s -a pingd # # Specifies whether Heartbeat should run v2-style. We set it to on # crm yes

Each cluster node must be specified using the FQDN. Also recommended is a ping node specification, as it allows the cluster nodes to test the reachability of an IP address, the default gateway for example. This is used in conjunction with the pingd and provides a value that can be used as a resource location constraint value. Resources can be pinned to specific nodes based on the reachability of the surrounding network.

The ha.cf.make script is only suited to handle 2 cluster nodes due to the unicast configuration of the heartbeat protocol. Heartbeat also supports a broadcast mode which is more suitable for clusters with more than 2 nodes. In that case this template is not necessary. We initially prefer 2 node cluster to utilize the unicast mode to reduce the amount of broadcasting on the cluster segment.

#!/bin/sh

#

# generate a host specific ha.cf file using uname -n

#

ROOTDIR=$(dirname $0)

cd $ROOTDIR

ha_host=$(uname -n)

case "$ha_host" in

iscsi-target-node1.amherst.edu)

ha_ucast1="ucast eth1 192.168.88.86"

;;

iscsi-target-node2.amherst.edu)

ha_ucast1="ucast eth1 192.168.88.85"

;;

*)

echo "missing hostname definition in $0"

exit 1

esac

if [ ! -e ha.cf.template ]; then

echo "ha.cf.template not found"

exit 1

fi

cat ha.cf.template | \

sed "s/@HA_UCAST1@/$ha_ucast1/" > ha.cf

The cluster node1 is usually used to make all configuration changes. Changes are then propagated using the rsync tool. The following script is commonly invoked to synchronize specific files and directories on the cluster nodes (currently only node2):

#!/bin/sh

NODE2=iscsi-target-node2

rsync -avrR \

--delete \

--files-from=amh_cluster_sync_include.conf \

--exclude-from=amh_cluster_sync_exclude.conf \

/ $NODE2:/

/etc/ha.d/ha.cf.make

ssh $NODE2 /etc/ha.d/ha.cf.make

# cluster /etc/ha.d/ # hosts /etc/hosts # iptables /etc/sysconfig/iptables # sysctl and boot up /etc/sysctl.conf /etc/rc.d/rc.local # iscsi-target (cluster management files) /etc/iscsi-target/ # ip route names /etc/iproute2/rt_tables # lvm /etc/lvm/lvm.conf # multipath /etc/multipath.conf # cron /etc/cron.d/

# iscsi-target excludes /etc/iscsi-target/iet_state/

Cluster Resources

Several cluster resources must be configured to make the iscsi-target host functional:

-

stonith (Shoot the other node in the head)

-

volume group

-

iscsi-target

In case cluster node1 looses its services and node2 considers node1 dead, node2 is designed to run the stonith resource, such that it can power off node1. This becomes more complex when the cluster has more than two nodes.

The volume group resource consists of the volume group and its associated file system that contains target configuration files.

The iscsi-target resource is a multi-instance resource, designed to be running on n number of nodes.

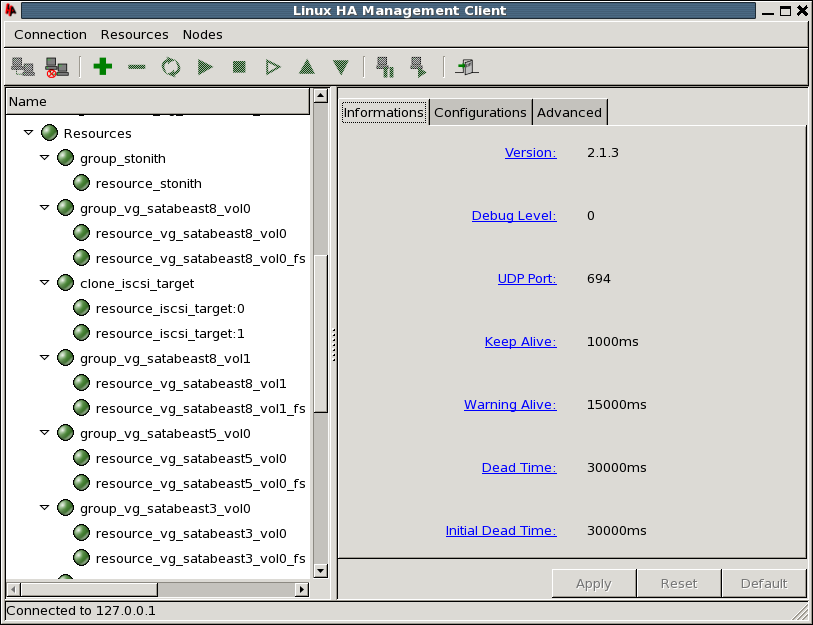

We utilize the heartbeat GUI, called hb-gui, to configure the resources.

The top level item linux-ha in the hb_gui requires the following configuration changes:

-

Enable Stonith

-

Default Action Timeout: 50 seconds

The stonith action is configured to power off a non-functioning node. The default action timeout value must be set to allow the iscsi-target services to cease on service transition.

Resources can be configured individually or as a group. The advantage of a grouped resource, is that one can add other resources into the group and can change the start and stop sequencing. Hence, all resources in this project are contained in resource groups.

Stonith

A resource group named group_stonith must be created and populated with a single resource named resource_stonith. The stonith resource is responsible for powering off a non-functioning node. Since our design consists of two nodes, we only allow node2 to stonith node1. This was chosen because we do not wish for both nodes to engage in a stonith operation at the same time. The underlying stonith code ensures that only node2 can actually perform the operation.

* group_stonith- resource_stonith

Resource group container

Attributes:

ordered: true

collocated: true

target_role: started

| |

stonith resource

Attributes:

Resource ID: resource_stonith

Type: external/stonith_ssh_proxy

Class: stonith

Provider: heartbeat

Parameters:

hostlist: iscsi-target-node1

username_at_host: stonith@stonithhost

ssh_orig_cmd_key: a098sdfsad8f90asdf09s8adf08as

|

We have derived our external/stonith_ssh_proxy resource from the heartbeat provided ssh resource and modified it to ssh into a host that manages power on/off operations of systems. Power operations are can be controlled using Intelligent Platform Management Interface (IPMI). Their interfaces are in a separate VLAN.

It is recommended to create a secure stonith proxy host that has access to the IPMI network. The iscsi-target servers can then ssh into the stonith proxy using a non-root account, followed by a key (this prevents accidental death if you ssh to the stonith proxy from node2):

[root@iscsi-target-node2 /] ssh stonith@stonithhost a098sdfsad8f90asdf09s8adf08as

The configuration of .ssh/authorized_keys2 on the stonith proxy host is as follows:

from="#.#.#.#",no-port-forwarding,no-X11-forwarding,no-agent-forwarding,no-pty,command="./stonith.sh iscsi-targetX-node1" ssh-rsa AAAAB3NzaC1.....hk0= root@iscsi-targetX-node2.amherst.edu

This allows only the node2 to ssh in from …. It will forcibly execute the stonith.sh in the home directory of the stonith user with first parameter iscsi-targetX-node1. By doing this, only a specific node can power off some other specific node, and no other non-related cluster node. The stonith.sh script is summarized as follows:

sys=$1

key=$SSH_ORIGINAL_COMMAND

case "$sys" in

iscsi-targetX-node1)

if [ "$key" == "a098sdfsad8f90asdf09s8adf08as" ]; then

ipmitool -H1.1.1.1 -Uadmin -Psetit chassis power reset

sleep 10

ipmitool -H1.1.1.1 -Uadmin -Psetit chassis power off

fi

esac

Volume Group

The configuration of a volume group resource consists of two resources, namely, the LVM volume group and and a file system resource residing within the volume group. The file system resource consists of configuration components such as ietd.conf, initiators.allow, targets.allow. To emphasize, configuration for ietd actually resides on a file system within a dedicated logical volume named lv_iscsi_target that is part of the volume group resource.

By keeping the configuration information within the volume group, the configuration information migrates along with the volume group when services are transferred from node1 to node2. It is understood that IET keeps its configuration files under /etc/iet; the iscsitarget-tools package provides scripts to automatically manage the configuration data during iscsi-target service state changes.

* group_vg_dsk_0- resource_vg_dsk_0

- resource_vg_dsk_0_fs

Resource group container

Attributes:

ordered: true

collocated: true

target_role: started

| |

LVM resource

Attributes:

Resource ID: resource_vg_dsk_0

Type: LVM

Class: ocf

Provider: heartbeat

Parameters:

volgrpname: vg_dsk_0

| |

Filesystem resource

Attributes:

Resource ID: resource_vg_dsk_0_fs

Type: Filesystem

Class: ocf

Provider: heartbeat

Parameters:

device: /dev/vg_dsk_0/lv_iscsi_target

directory: /mnt/iscsi-target/vg_dsk_0

fstype: ext3

|

Before activating the resource group container, prepare the ext3 file system using the following commands:

[root@iscsi-target-node1 /] lvcreate -L8G -n lv_iscsi_target vg_dsk_0

[root@iscsi-target-node1 /] mke2fs -j /dev/vg_dsk_0/lv_iscsi_target

[root@iscsi-target-node1 /] tune2fs -c 0 -i 0 /dev/vg_dsk_0/lv_iscsi_target

Each configured volume group must also be registered in the ietd_config.sh file, so that the targets_update.sh script can properly manage configured targets.

iscsi-target

The iscsi-target resource is a resource group of type clone because iscsi-target services will be running on n number of nodes. The clone_iscsi_target resource group consists of n resource_iscsi_target:# instances:

* clone_iscsi_target- resource_iscsi_target:0

- resource_iscsi_target:1 - ...

|

Resource group container. Standard resource containers are created before inserting cluster resources. Enable the clone checkbox causes the clone container to be implicitly created during the creation of the iscsi-target resource.

The clone_max defines how many instances of iscsi-target services we are running and it should match the number of nodes in the cluster. The clone_node_max value indicates how many instances of the iscsi-target service can be run on a single node, in our case, one; you cannot run multiple instances of the iSCSI Enterprise Target (IET) on a host. Attributes:

clone_max: 2

clone_node_max: 1

target_role: started

| |

iscsi-target:# service instances

Attributes:

Resource ID: resource_iscsi_target

Type: iscsi-target

Class: lsb

Provider: heartbeat

|

Cluster Resource Locations

Resources if not configured with location constraints will execute on any random node of the cluster. Locations constraints can be assigned to resources or resource groups using runtime conditions such as #uname eq iscsi-target-node2, meaning the resource is allowed to run if the current host matches iscsi-target-node2. If the node is not available, then the resource is forced onto another online node. The condition gives the resource a preferred node.

* Constraints/Locations

- location_stonith  - location_vg_dsk_0

- location_vg_dsk_0  - ...

- ...

stonith is preferred on node2

Attributes:

ID: location_stonith

Resource: group_stonith

Score: Infinity

Boolean: And

Expressions:

#uname eq iscsi-target-node2.amherst.edu

| |

The location of a volume group is assigned by the operator.

Attributes:

ID: location_vg_dsk_0

Resource: group_vg_dsk_0

Score: Infinity

Boolean: And

Expressions:

#uname eq iscsi-target-node1.amherst.edu

|

Cluster Resource Ordering

Since there are two resource groups that provide services, the volume group and the iscsi-target clone resource, it must be ensured that the resource groups start up and shut down in a specific order. This dependency can be enforced using constraint order rules.

* Constraints/Orders

- order_vg_dsk_0_iscsi  - ...

- ...

Each volume group and iscsi-target service requires a constraint order definition. Below we enforce that the resource group named group_vg_dsk_0 is started before the clone_iscsi_target services. The rule is automatically reversed when stopping the services.

Attributes:

ID: order_vg_dsk_0_iscsi

From: group_vg_dsk_0

Type: before

To: clone_iscsi_target

|

Operator’s Manual

The operator’s manual concentrates on the iscsitarget-tools package configuration and program files allowing you to configure and provision targets and LUNs.

The /etc/iscsi-target directory contains the following files:

[root@iscsi-target-node1 /]# ls -l /etc/iscsi-target/ total 216 -rwxr-xr-x 1 root root 373 Jun 14 2010 ietd_cluster.sh -rw-r--r-- 1 root root 1266 Aug 3 2010 ietd_config.sh-rwxr-xr-x 1 root root 9427 Dec 23 21:49 ietd_ddmap.sh -rwxr-xr-x 1 root root 1478 Apr 8 2010 ietd_iptables.sh -rw-r--r-- 1 root root 5561 Jun 16 2010 ietd_lib.sh -rwxr-xr-x 1 root root 2737 Apr 11 2010 ietd_routing.sh -rw-r--r-- 1 root root 2074 Jul 26 2010 ietd_service.sh -rwxr-xr-x 1 root root 22406 Nov 21 22:00 ietd_targets.sh -rwxr-xr-x 1 root root 528 Apr 5 2010 ietd_vg.sh drwxr-xr-x 2 root root 20480 Mar 17 16:35 iet_state -rw-r--r-- 1 root root 453 Feb 21 13:40 iet-tools.conf

-rwxr-xr-x 1 root root 19087 Nov 21 22:13 iet-tools.plx -rwxr-xr-x 1 root root 67 May 20 2010 iostat_top.sh

-rwxr-xr-x 1 root root 3038 Jun 14 2010 lvm_cluster.sh

-rwxr-xr-x 1 root root 2719 Nov 21 22:20 session_top.sh

-rw-r--r-- 1 root root 29432 Mar 16 14:06 targets.conf

-rwxr-xr-x 1 root root 407 Jan 2 22:38 targets_update.sh

-rwxr-xr-x 1 root root 8245 Dec 23 21:59 zfs_tools.sh

| configuration files | |

| shell scripts to be invoked after making changes to configuration files. |

Configuring ietd_config.sh (Global Config)

The ietd_config.sh script contains one-time configuration variables and values and also variables that need to be modified after adding a new volume group resource.

- VGMETA_DIR=/mnt/iscsi-target

-

The VGMETA_DIR variable is utilized when referencing volume group specific data components. The volume group name is appended to VGMETA_DIR and the resulting path must be the mount point of the volume group resource’s file system resource.

Using the example of vg_dsk_0, the resulting path would be /mnt/iscsi-target/vg_dsk_0. Under this location, the iscsitarget-tools scripts maintain several other files and directories such as:

- ddmap

-

Contains volume group related ddmap state information. It is copied from /proc/net/iet/ddmap/… during iscsi-target service shutdown or failover.

- iet

-

Contains volume group related IET configuration files derived from targets.conf such as ietd.conf, initiators.allow and targets.allow.

- lunsize

-

Contains volume group related logical volume sizes that are used to detect LUN size changes.

- targets.d

-

Contains volume group related user programmable/dynamic target configurations. This can be used by other automation processes to generate targets on the fly without modifying the target.conf file.

- target_sessions

-

Contains volume group related targets and their number of sessions per target used by sessions_top.sh.

- zfs

-

Contains volume group related zfs meta data for backup purposes.

- DDMAP_PROC=/proc/net/iet/ddmap

-

The location of the RAM based ddmap data. This location is fixed.

- IPTABLES_PORT=3260

-

The default port for iSCSI traffic. iSCSI traffic is blocked during service shutdown and unblocked during service startup.

- IET_CONF_DIR=/etc/iet

-

The default location for IET configuration files. This location is fixed.

- IET_TARGET_HOSTS="iscsi-target-node1 iscsi-target-node2"

-

Specify the complete FQDN of each cluster member. Use uname -n to determine the proper names. This is used when processing the targets.conf on each cluster node.

- IET_VGS="vg_dsk_0 vg_dsk_1"

-

Specifies the volume groups the iscsi-target cluster manages. Volume groups must be visible on all cluster nodes.

- IET_TARGET_PREFIX="iqn.1990-01.edu.amherst:iet.iscsi-target"

-

This is the prefix for each generated target. Once it is set, do not change it, otherwise iSCSI initiators will be confused.

Configuring target.sh (Targets and LUNs)

The target.conf file is the core configuration file that affects the targets and logical volumes. It consists of several sections that are called upon during configuration update. The configuration update process is invoked via targets_update.sh. This script has to be executed after changing targets.conf.

The targets_update.sh script is expected to be run from cluster node1 and updates the targets.conf file on the other cluster nodes. Then the targets_update.sh executes the callbacks defined below on each cluster node individually. The targets.conf contains the definition of all targets across all nodes.

Given two volume groups, vg_dsk_0 on node1 and vg_dsk_1 on node2, the target.conf file will have configuration lines referring to both volume groups. When node1 processes the target.conf it will only process logical volumes residing on the active volume group vg_dsk_0. When node2 processes the same target.conf file, it will only process logical volumes residing on the active volume group vg_dsk_1.

The target.conf file is treated as a bash shell script.

The following section outlines the implemented function callbacks:

- conf_global

-

Global configuration options

- conf_target_options

-

Target options

- conf_target_callback_pre

-

Callback before target is created

- conf_target_callback

-

Callback when target is created

- conf_targets

-

Configure Targets and LUNs

targets.conf: conf_global

The conf_global function is invoked to establish configuration values that are also considered global in ietd.conf. Refer to the man ietd.conf help page for more information.

The shell function option followed by a native IET option can be invoked. Multiple option invocations are supported. For example:

function conf_global

{

option ImmediateData Yes

option TgtNotFoundRespondServiceUnavailable Yes

}

The TgtNotFoundRespondServiceUnavailable is not a standard IET option; details of this enhancement are described here: IET Patch: iet-07-tgtnotfound-svcunavailable

targets.conf: conf_target_options

The conf_target_options section could be made part of conf_global, however, it was created to possibly make changes on a target specific basis in the future.

The shell function option followed by a native IET option can be invoked. Multiple option invocations are supported. For example:

function conf_target_options

{

option InitialR2T No

option ImmediateData Yes

option MaxRecvDataSegmentLength 262144

option MaxXmitDataSegmentLength 262144

option MaxBurstLength 262144

option FirstBurstLength 65536

# do not close connections on initiators, let the tcp stack time things out

option NOPInterval 0

option NOPTimeout 0

}

targets.conf: conf_target_callback_pre

The conf_target_callback_pre function is invoked before processing the target configuration under conf_targets. It is currently used to for Solaris ZFS backup support. The VGMETA_DIR/vg/zfs/backup file is initialized.

function conf_target_callback_pre

{

local vg="$1"

mkdir -p "$VGMETA_DIR/$vg/zfs"

> "$VGMETA_DIR/$vg/zfs/backup"

}

targets.conf: conf_target_callback

The conf_target_callback function is invoked while processing the target configuration under conf_targets. It is currently used to include or exclude targets for ZFS backup processing. Targets to be included in Solaris ZFS backups must be appended to VGMETA_DIR/vg/zfs/backup.

function conf_target_callback

{

local dev="$1"

local vg="$2"

local lv="$3"

case "$lv" in

*_zfssnap)

echo "callback> zfssnaps $vg/$lv are backups we are processing"

;;

lv_srvrux69_digitool_bkup_vol00)

echo "callback> no backup"

;;

*)

echo "$vg/$lv" >> "$VGMETA_DIR/$vg/zfs/backup"

;;

esac

}

targets.conf: conf_targets

The conf_targets section is critical to defining and managing targets and LUNs.

|

|

A target definition only supports single LUN. This decision had to be made, because we cannot support a target with multiple LUNs residing on different volume groups. The reason is that one can migrate a single volume group within a cluster. A target must have access to all of its configured LUNs from volume groups that are active on the current cluster node. |

The shell function target followed by several parameters can be invoked. Multiple target invocations are supported.

target up /dev... blockio AMHDSK- pg_vlan10_fabric "$ig_srvr_name"

- target

-

The target function being invoked.

- up|down

-

Specify up to bring the target online. Specify down to remove the target; connections with initiators will be lost.

- /dev…

-

Specify a path to a logical volume using the format: /dev/vg/lv. Only logical volumes are supported with the iscsitarget-tools package.

- blockio|fileio

-

Standard IET Type option, fileio uses Linux page-cache, blockio bypasses the Linux page-cache.

- AMHDSK-yymmdd-nn

-

Specify the LUN’s serial number, both IET ScsiId and ScsiSN are configured with this option. It is recommended to define a string with a maximum length of 16 characters.

- pg_vlan10_fabric

-

Specify the IET_PORTAL_GROUP value indicating which network interfaces service this target.

- "$ig_srvr_name"

-

Specify the initiator name(s) that can access this target. Use a space to separate multiple initiator names and ensure to quote the entire string so that it appears as one argument.

|

|

A target is exposed via specific network interfaces, also known as portals. The exposed target then requires access control to ensure that permitted initiators can access the target. Access control can be accomplished using the initiator IP addresses or initiator name (IQN). Utilizing the initiator name means maintaining a single value. This is also crucial when working with the iscsi-portal, it will impersonate the initiator using the initiator name to query for available targets. |

|

|

CHAP could be used for authentication, however, we have found problems with initiators not being able to properly reconnect under load once a network connection was broken. The iscsitarget-tools package already supports the incoming and outgoing user commands. Invoke the shell function in the targets.conf file: user in|out username secret |

A functional example:

#---------------------------------------------------------------------- # server# servername (Comment) #---------------------------------------------------------------------- ig_server01_apps="iqn.1994-05.com.redhat:swiscsi.server01.apps" target up /dev/vg_dsk_0/lv_server01_apps_vol00 blockio AMHDSK-100415-01 pg_vlan10_fabric "$ig_server01_apps"

The resulting iSCSI target IQN (iSCSI Qualified Name) is derived from the IET_TARGET_PREFIX, the IET_PORTAL_GROUP, the volume group and the logical volume name. When the target configuration is processed, the target’s IQN is displayed:

tgt> tgt_name: iqn.1990-01.edu.amherst:iet.iscsi-target.pg-vlan10-fabric.vg-dsk-0.lv-server01-apps-vol00 (up) tgt> lun: 0, path: /dev/vg_dsk_0/lv_server01_apps_vol00 lun size: 1024.00G

Operator Commands

The following section explains commonly used operator commands. There are several other invokable shell scripts located under /etc/iscsi-target, many of them are used internally when starting and stopping iscsi-target services.

targets_update.sh

After making changes to the targets.conf file, invoke targets_update.sh script from node1:

[root@iscsi-target-node1 /]# ./targets_update.sh

This will first synchronize the cluster configuration files, specifically targets.conf and then invoke on each cluster node the ietd_targets.sh script to configure targets and logical volumes. It will only work on logical volumes, hence targets, that have an active volume group on the current node.

New targets are dynamically added. Current online targets flagged as offline will be removed; this does not remove the underlying logical volume, it only removes the target configuration. Logical volumes that have changed size are taken offline and online to allow IET to see the new volume size. Initiator connections are forced to re-establish their connections.

lvm_cluster.sh

Logical volumes must be managed using the lvm_cluster.sh provided command. The logical volumes of a volume group are only active on one cluster node. Hence, the operator would have to know which volume group is active on which node. The lvm_cluster.sh script handles that instead. Without any arguments the usage is provided:

[root@iscsi-target-node1 /]# ./lvm_cluster.sh

Usage: ./lvm_cluster.sh {vgs|vgdisplay|lvs|lvscan|lvcreate|lvextend|lvremove}

The lvm_cluster.sh script understands the above LVM commands and extracts based on their arguments the referenced volume group. Once the volume group is determined it executes the LVM command on the proper cluster node.

Operator Tasks

The following section explains commonly used operator tasks, such as adding and removing volumes.

Add a new volume

Before creating new volume the operator must decide in which volume group to create the volume. The volume group selection can be based on available space or based on how much IO the logical volume is expected to receive. It is better to distribute high volume IO logical volumes across different volume groups. Use the vgs command shown below to determine the available volume group space:

[root@iscsi-target-node1 /]# ./lvm_cluster.sh vgs

iet_host: iscsi-target-node1.amherst.edu

VG #PV #LV #SN Attr VSize VFree

vg_dsk_0 1 25 1 wz--n- 16.37T 7.58T

iet_host: iscsi-target-node2.amherst.edu

VG #PV #LV #SN Attr VSize VFree

vg_dsk_1 1 13 0 wz--n- 16.37T 5.98T

Using the data from the above volume group output, vg_dsk_0 has the most space available. The logical volume with a size of 1024G and a name of lv_server01_apps_vol00 on the volume group vg_dsk_0 is created next:

[root@iscsi-target-node1 /]# ./lvm_cluster.sh lvcreate -L1024G -n lv_server01_apps_vol00 vg_dsk_0

Once the logical volume is created, it must be configured in the target.conf file:

#---------------------------------------------------------------------- # server# servername (Comment) #---------------------------------------------------------------------- ig_server01_apps="iqn.1994-05.com.redhat:swiscsi.server01.apps" target up /dev/vg_dsk_0/lv_server01_apps_vol00 blockio AMHDSK-100415-01 pg_vlan10_fabric "$ig_server01_apps"

The above target configuration specifies that the target should be brought online. It uses the logical volume device path with blockio and a volume serial number of "AMHDSK-100415-01" exposed on network interfaces that are associated with pg_vlan10_fabric tag and allowing the initiator specified in ig_server01_apps access.

The ig component of the initiator variable means "initiator group" as multiple initiators can be allowed access to a target. This applies to clustered targets, such as VMware ESX/i clusters, for example. Separate multiple initiators with a white space.

Once configured, make the target active and online.

[root@iscsi-target-node1 /]# ./targets_update.sh

tgt> tgt_name: iqn.1990-01.edu.amherst:iet.iscsi-target.pg-vlan10-fabric.vg-dsk-0.lv-server01-apps-vol00 (up)

tgt> lun: 0, path: /dev/vg_dsk_0/lv_server01_apps_vol00 lun size: 1024.00G

Connections to the target can be verified by looking at the contents of /proc/net/iet/session. The target and volume configuration can be verified by looking at the contents of /proc/net/iet/volume.

Offline a volume

Taking a volume offline will disrupt the network traffic with the initiator. It is important to ensure that the initiator has closed active sessions before commencing with the next step:

#---------------------------------------------------------------------- # server# servername (Comment) #---------------------------------------------------------------------- ig_server01_apps="iqn.1994-05.com.redhat:swiscsi.server01.apps" target down/dev/vg_dsk_0/lv_server01_apps_vol00 blockio AMHDSK-100415-01 pg_vlan10_fabric "$ig_server01_apps"

| note the keyword down. |

Update the configuration:

[root@iscsi-target-node1 /]# ./targets_update.sh

tgt> tgt_name: iqn.1990-01.edu.amherst:iet.iscsi-target.pg-vlan10-fabric.vg-dsk-0.lv-server01-apps-vol00 (down)

The related volume and sessions should not appear any more in /proc/net/iet/session and /proc/net/iet/volume.

Removing a volume

To remove a volume, first offline it. This step is described in the previous operator task. Once the volume is offline, the logical volume can be removed. Ensure that there is a backup before removing the logical volume.

[root@iscsi-target-node1 /]# ./lvm_cluster.sh lvremove /dev/vg_dsk_0/lv_server01_apps_vol00

Change volume size

Volume sizes should only be increased, otherwise data loss may occur. IET does not natively support this operation, hence iscsitarget-tools tracks the volume sizes. When a volume size change has been detected, the volume is temporarily taken offline and brought back online. Initiators will have to reconnect at that point. At the initiator side, the operator can rescan devices to see the device size changes.

The following example adds 500 GB of disk space to the logical volume:

[root@iscsi-target-node1 /]# ./lvm_cluster.sh lvextend -L+500G /dev/vg_dsk_0/lv_server01_apps_vol00

Update the configuration:

[root@iscsi-target-node1 /]# ./targets_update.sh

Change the portal group

To change the volume’s portal group, first take the volume offline, change the portal group assignment and then configure the volume as online. These tasks have been described previously. Changing a target’s portal group may be required when exposing the target to a different VLAN, for example.

Status Display: IO Top

The I/O performance values can be monitored in real-time using the iostat_top.sh script. It utilizes the lvm_iostat tool to translate device mapper paths such as dm-27 to proper logical volume paths. The display is updated every 2 seconds and processes the data from /proc/diskstats. Several specific data columns are presented:

22:38:56 up 73 days, 27 min, 5 users, load average: 1.30, 3.20, 3.53

device displayname read write

dm-4 mpath_vg_satabeast3_vol0 7786014208 7.3G 353500160 337M

dm-5 mpath_vg_satabeast3_vol1 8446123520 7.9G 447481344 426M

dm-3 mpath_vg_satabeast5_vol0 7358440448 6.9G 2289664 2.2M

dm-6 mpath_vg_satabeast8_vol0 87874743893504 79.9T 31565047747584 28.7T

dm-2 mpath_vg_satabeast8_vol1 83430106234880 75.9T 15029405897216 13.7T

dm-0 vg_sata0-lv_os_root 4648768512 4.3G 260904964096 242G

dm-1 vg_sata0-lv_os_swap 446464 436k 0 0

dm-25 vg_satabeast8_vol0-lv_csc0_gfs_vol00 0 0 0 0

dm-27 vg_satabeast8_vol0-lv_csc1_gfs_vol00 72137330688 67G 10572254326784 9.6T

dm-17 vg_satabeast8_vol0-lv_iscsi_target 139301888 132M 13412704256 12G

dm-54 vg_satabeast8_vol0-lv_srvrnt09_storage_p_vol00 209945753600 195G 134006331392 124G

dm-52 vg_satabeast8_vol0-lv_srvrnt19_dcs_e_vol00 81667016704 76G 144084541952 134G

read/s write/s #io #io[ms] read[bs] write[bs]

0 0 0 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

100k 209k 2 101 14628 4290

267k 1.0M 0 79 136704 8610

0 0 0 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

0 0 0 0 0 0

- read

-

number of bytes read, both in units of bytes and human readable format.

- write

-

number of bytes written, both in units of bytes and human readable format.

- read/s

-

number of bytes read within a one second period

- write/s

-

number of bytes written within a one second period

- #io

-

number of I/Os currently in progress

- #io[ms]

-

number of milliseconds spent doing I/Os.

- read[bs]

-

approximate read blocksize within a one second period.

- write[bs]

-

approximate write blocksize within a one second period.

|

|